Transfer Learning of Sensorimotor Knowledge for Embodied Agents

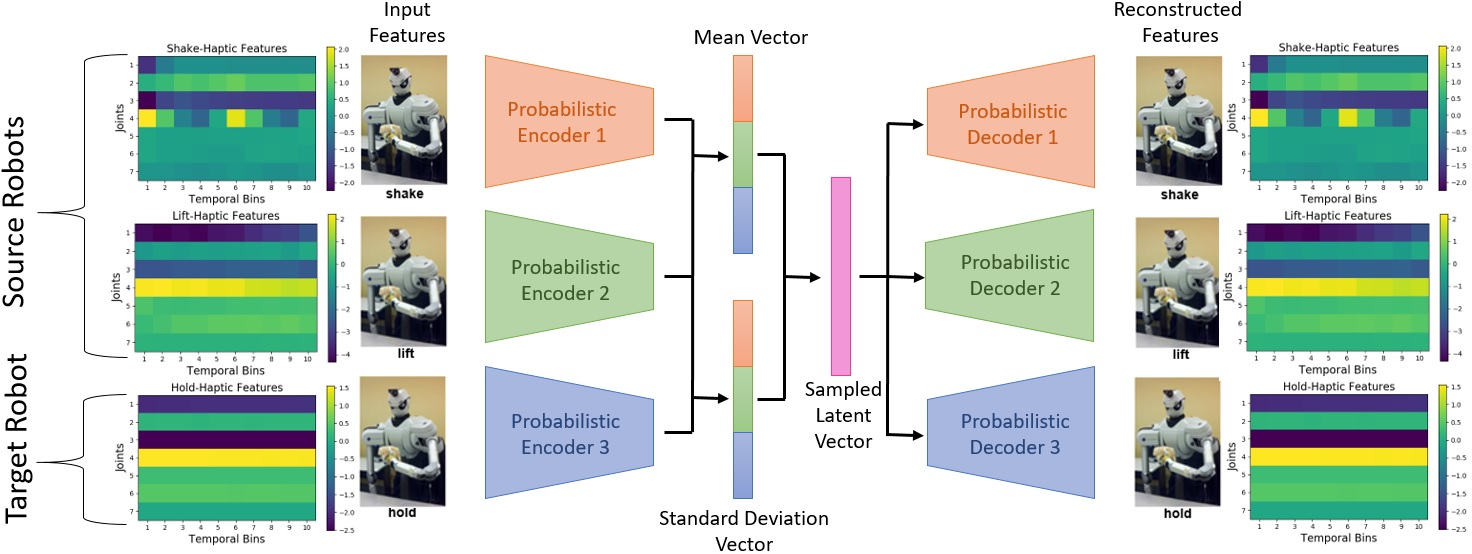

Prior work has shown that learning haptic, tactile, and auditory models of objects can allow robots to perceive object properties that may be undetectable using visual input alone. However, learning such models is costly, as it requires extensive physical exploration of the robot's environment. Furthermore, such models are specific to the individual robot that learns them and cannot be used directly by other, morphologically different robots that use different actions and sensors. To address these limitations, our current work focuses on enabling robots to share sensorimotor knowledge with one another so as to speed up learning. We have proposed two main approaches: 1) an encoder-decoder approach, which attempts to map sensory data from one robot to another, and 2) a kernel manifold learning framework, which takes sensorimotor observations from multiple robots and embeds them in a shared space to be used by all robots for perception and manipulation tasks.
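
As a rough illustration of the encoder-decoder idea, the sketch below maps one robot's feature vectors to another's using a linear encoder (source features to a small latent code) and a linear decoder (latent code to target features), trained by stochastic gradient descent on squared error. It assumes paired data, i.e., both robots explored the same objects, and uses synthetic toy features. The linear architecture and the names `train_encoder_decoder` and `translate` are illustrative assumptions, not the published model.

```python
import random

def matvec(M, v):
    """Multiply matrix M (a list of rows) by vector v."""
    return [sum(m * x for m, x in zip(row, v)) for row in M]

def train_encoder_decoder(src, tgt, latent_dim=2, lr=0.01, epochs=1000, seed=0):
    """Fit tgt ~ D(E(src)) with a linear encoder E and a linear decoder D.

    Assumption: src[i] and tgt[i] are the two robots' feature vectors for the
    same object/interaction. Trained by SGD on 0.5 * squared error.
    """
    rng = random.Random(seed)
    d_s, d_t = len(src[0]), len(tgt[0])
    E = [[rng.uniform(-0.1, 0.1) for _ in range(d_s)] for _ in range(latent_dim)]
    D = [[rng.uniform(-0.1, 0.1) for _ in range(latent_dim)] for _ in range(d_t)]
    for _ in range(epochs):
        for x, y in zip(src, tgt):
            z = matvec(E, x)                                  # encode source observation
            err = [p - t for p, t in zip(matvec(D, z), y)]    # decode and compare to target
            # Backpropagate the error to the latent code before D is updated.
            grad_z = [sum(D[i][k] * err[i] for i in range(d_t)) for k in range(latent_dim)]
            for i in range(d_t):
                for k in range(latent_dim):
                    D[i][k] -= lr * err[i] * z[k]
            for k in range(latent_dim):
                for j in range(d_s):
                    E[k][j] -= lr * grad_z[k] * x[j]
    return E, D

def translate(E, D, x):
    """Predict the target robot's features from the source robot's features."""
    return matvec(D, matvec(E, x))

# Toy paired data: the target robot's features are a hidden linear function of the source's.
rng = random.Random(1)
A = [[1.0, 0.5, -0.5], [0.0, -1.0, 0.8]]
src = [[rng.uniform(-1, 1) for _ in range(3)] for _ in range(30)]
tgt = [matvec(A, x) for x in src]
E, D = train_encoder_decoder(src, tgt)
mse = sum(sum((p - t) ** 2 for p, t in zip(translate(E, D, x), y))
          for x, y in zip(src, tgt)) / len(src)
```

A real cross-robot mapping would typically need a nonlinear model and far richer sensor data; the linear version simply keeps the encode, decode, and train loop visible.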

- Tatiya, G., Shukla, Y., Edegware, M., and Sinapov, J. (2020)

Haptic Knowledge Transfer Between Heterogeneous Robots using Kernel Manifold Alignment

In Proceedings of the 2020 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS) [PDF]

- Tatiya, G., Hosseini, R., Hughes, M., and Sinapov, J. (2020)

A Framework for Sensorimotor Cross-Perception and Cross-Behavior Knowledge Transfer for Object Categorization

Frontiers in Robotics and AI, Vol. 7, special issue "ViTac: Integrating Vision and Touch for Multimodal and Cross-Modal Perception", 2020 [LINK] [BIB]

Augmented Reality for Human-Robot Interaction

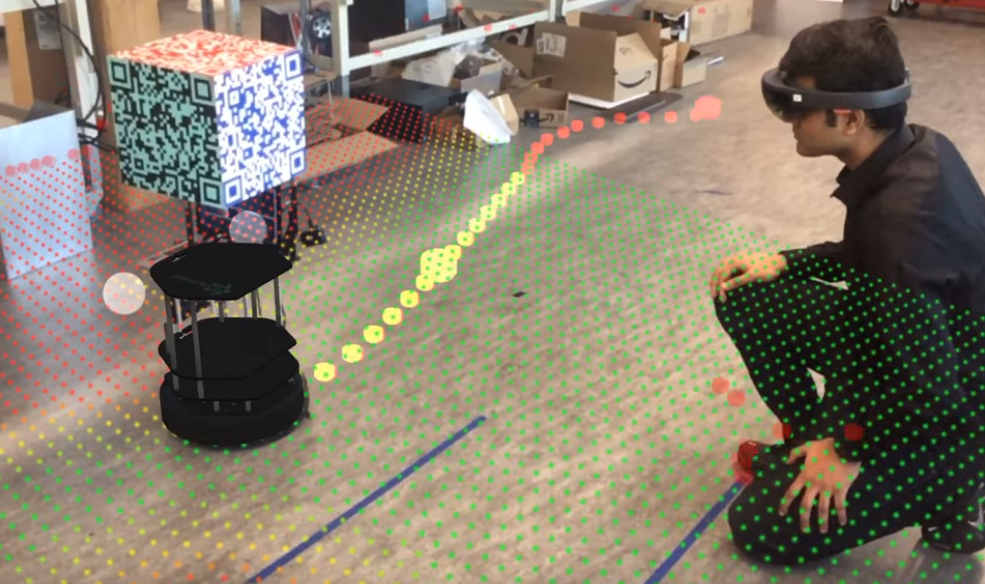

Establishing common ground between an intelligent robot and a human requires communicating the robot's intentions, behavior, and knowledge to the human so as to build trust and ensure safety in a shared environment. Many types of robot information (e.g., motion plans) are difficult to convey to human users through modalities such as language. To address this need, we have developed an Augmented Reality (AR) system that projects a robot's sensory and cognitive data in context to a human user. By leveraging AR, robot data that would typically be “hidden” can be visualized by rendering graphics over the real world on AR-supported devices. We have evaluated our system in the context of K-12 robotics education, where students used this hidden data to program their robots to solve a maze navigation task. We are also conducting ongoing studies to examine how AR visualization can enhance human-robot collaboration in a shared task environment.

- Cleaver, A., Muhammad, F., Hassan, A., Short, E., and Sinapov, J. (2020)

SENSAR: A Visual Tool for Intelligent Robots for Collaborative Human-Robot Interaction

In Proceedings of the 2020 AAAI Fall Symposium "Artificial Intelligence for Human-Robot Interaction" [PDF]

- Cheli, M., Sinapov, J., Danahy, E., and Rogers, C. (2018)

Towards an Augmented Reality Framework for K-12 Robotics Education

In Proceedings of the 1st International Workshop on Virtual, Augmented, and Mixed Reality for Human-Robot Interactions (VAM-HRI), Chicago, IL, Mar. 5, 2018. [PDF] [BIB]

Grounded Language Learning

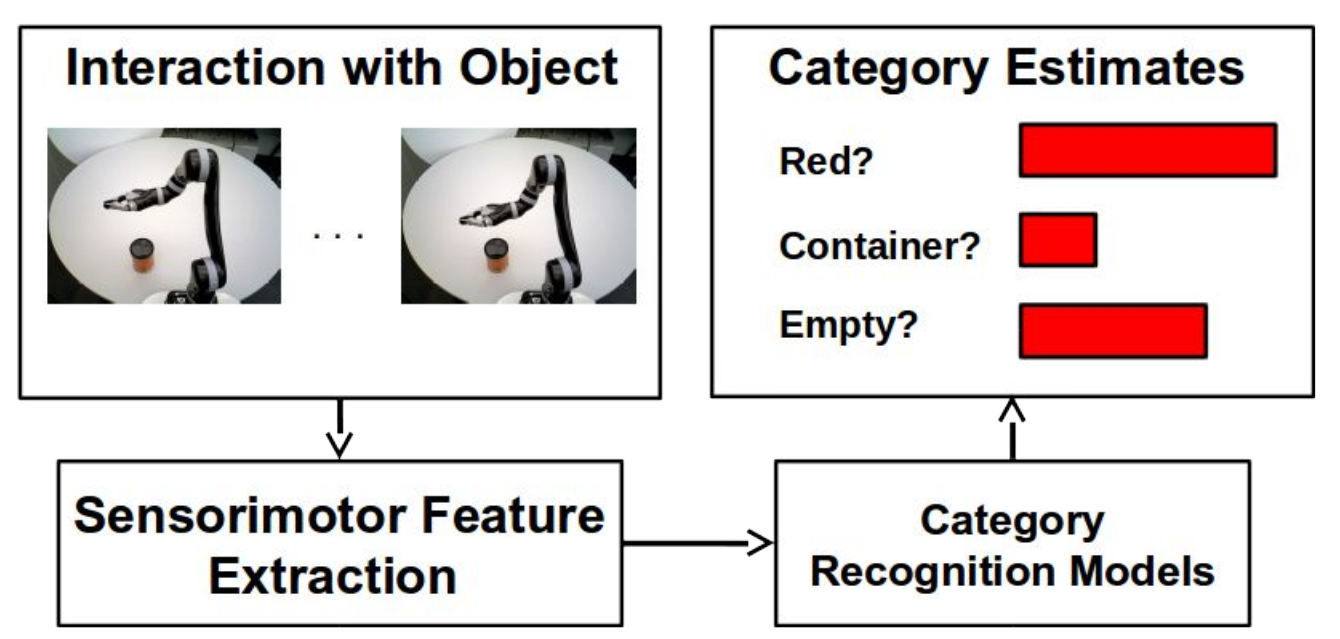

Grounded language learning connects words like ‘red’ and ‘square’ to robot perception. The vast majority of existing work in this space limits robot perception to vision. In this project, we go beyond vision by enabling a robot to build perceptual models from haptic, auditory, and proprioceptive data acquired through its exploratory behaviors. We investigate how a robot can learn words through natural human-robot interaction (e.g., gameplay), as well as how a robot should act towards an object when trying to identify it given a natural language query (e.g., "the empty red bottle"). Datasets from this research are available upon request.
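
To make multimodal word models concrete, here is a minimal sketch, assuming each object is described by one feature vector per sensorimotor context (a behavior-modality pair such as lifting with haptics). Each word gets one nearest-centroid classifier per context, and the contexts vote with weights equal to their training accuracy; a query such as "the empty red bottle" is resolved by picking the object with the highest summed word score. The classifier type, the weighting scheme, the context names, and the toy features are all hypothetical simplifications, not the models used in the publications below.

```python
from statistics import mean

def centroid(vectors):
    return [mean(col) for col in zip(*vectors)]

def sq_dist(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))

class WordModel:
    """Hypothetical grounding model for one word (e.g. "red", "empty").

    One nearest-centroid classifier per sensorimotor context, i.e. a
    (behavior, modality) pair; their yes/no decisions are combined by a vote
    weighted by each context's accuracy on the training objects.
    """

    def __init__(self, examples):
        # examples: list of (features, applies); features maps context -> vector,
        # applies is True if a human used the word for that object.
        self.centroids, self.weights = {}, {}
        for ctx in examples[0][0]:
            pos = [f[ctx] for f, applies in examples if applies]
            neg = [f[ctx] for f, applies in examples if not applies]
            self.centroids[ctx] = (centroid(pos), centroid(neg))
            hits = sum(self.applies_in(ctx, f[ctx]) == applies for f, applies in examples)
            self.weights[ctx] = hits / len(examples)

    def applies_in(self, ctx, x):
        pos_c, neg_c = self.centroids[ctx]
        return sq_dist(x, pos_c) < sq_dist(x, neg_c)

    def score(self, features):
        """Weighted vote in [-1, 1]; positive means the word likely applies."""
        vote = sum(w if self.applies_in(ctx, features[ctx]) else -w
                   for ctx, w in self.weights.items())
        return vote / sum(self.weights.values())

def resolve_query(words, models, objects):
    """Pick the object whose features best match every word in the query."""
    return max(objects, key=lambda name: sum(models[w].score(objects[name]) for w in words))

# Toy features: ("look", "color") -> color-like vector, ("lift", "haptic") -> felt weight.
LOOK, LIFT = ("look", "color"), ("lift", "haptic")
objects = {
    "red_empty":  {LOOK: [0.9, 0.1], LIFT: [0.1]},
    "red_full":   {LOOK: [1.0, 0.0], LIFT: [0.8]},
    "blue_empty": {LOOK: [0.1, 0.9], LIFT: [0.2]},
    "blue_full":  {LOOK: [0.0, 1.0], LIFT: [0.9]},
}
labels = {"red": {"red_empty", "red_full"}, "empty": {"red_empty", "blue_empty"}}
models = {w: WordModel([(objects[o], o in names) for o in objects]) for w, names in labels.items()}
print(resolve_query(["red", "empty"], models, objects))  # red_empty
```

In this toy data, "empty" cannot be seen, only felt: the color context gets a low weight for that word, while the lifting context decides it, which mirrors why non-visual modalities matter here.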

- Thomason, J., Padmakumar, A., Sinapov, J., Hart, J., Stone, P., and Mooney, R. (2017)

Opportunistic Active Learning for Grounding Natural Language Descriptions

In Proceedings of the 1st Annual Conference on Robot Learning (CoRL 2017), Mountain View, California, November 13-15, 2017. [PDF]

- Thomason, J., Sinapov, J., Svetlik, M., Stone, P., and Mooney, R. (2016)

Learning Multi-Modal Grounded Linguistic Semantics by Playing "I Spy"

In Proceedings of the 2016 International Joint Conference on Artificial Intelligence (IJCAI) [PDF]

Curriculum Learning for RL Agents

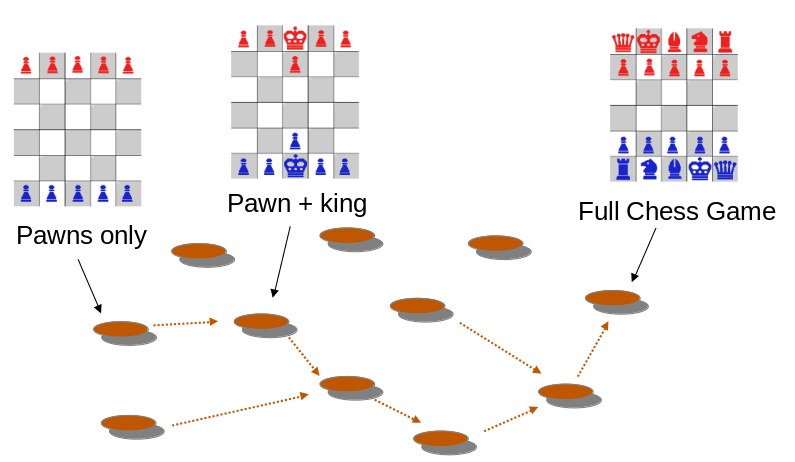

In transfer learning, training on a source task is leveraged to speed up or otherwise improve learning on a difficult target task. The goal of this project is to develop methods that can automatically construct a sequence of source tasks (i.e., a curriculum) such that performance and/or training time on a difficult target task is improved.
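
One way to picture the output of such a method is a curriculum graph whose edges record which source task is expected to help which other task; a curriculum can then be read off as a topological order that ends at the target. The sketch below assumes those edges are already known (estimating them is the hard part and is not shown), and the task names and the helper `curriculum_for` are hypothetical.

```python
from graphlib import TopologicalSorter  # Python 3.9+

def curriculum_for(target, helps):
    """Return an ordered list of tasks ending with `target`.

    helps: dict mapping a task to the set of source tasks assumed to transfer
    to it. Tasks that do not (transitively) feed the target are dropped.
    Raises graphlib.CycleError if the assumed transfer edges form a cycle.
    """
    needed, stack = set(), [target]
    while stack:  # collect every task that feeds the target, directly or indirectly
        task = stack.pop()
        if task not in needed:
            needed.add(task)
            stack.extend(helps.get(task, ()))
    graph = {t: set(helps.get(t, ())) for t in needed}
    # static_order() lists each task only after all of the tasks that feed it.
    return list(TopologicalSorter(graph).static_order())

helps = {
    "target_maze": {"room_with_key", "avoid_pits"},
    "room_with_key": {"empty_room"},
    "avoid_pits": {"empty_room"},
    "unrelated_task": {"empty_room"},
}
print(curriculum_for("target_maze", helps))  # starts with empty_room, ends with target_maze
```

Because every kept task is an ancestor of the target, the target is the only task with no successors and is therefore always last; the order of independent tasks (here, the two middle ones) is left unspecified.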

- Narvekar, S., Sinapov, J., and Stone, P. (2017)

Autonomous Task Sequencing for Customized Curriculum Design in Reinforcement Learning

In Proceedings of the 2017 International Joint Conference on Artificial Intelligence (IJCAI), Melbourne, Australia, August 19-25, 2017. [PDF]

- Svetlik, M., Leonetti, M., Sinapov, J., Shah, R., Walker, N., and Stone, P. (2017)

Automatic Curriculum Graph Generation for Reinforcement Learning Agents

In Proceedings of the 31st Conference of the Association for the Advancement of Artificial Intelligence (AAAI), San Francisco, CA, Feb. 4-9, 2017. [PDF]